The easiest way to approach the A/B testing process is to break it down into three phases: Planning, Testing, and Results.

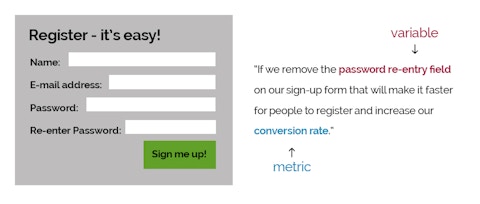

Identify your hypothesis. Every test needs to start with a hypothesis. Write a detailed sentence that includes the variable to be tested and the metric that will be used to prove or disprove the success of that variable.

Example hypothesis: "If we remove the password re-entry field from our sign-up form, people will register faster and our conversion rate will increase."

Plan ahead to accurately gather your data. Knowing what tools to use to gather the most usable data is very important. Do you need additional tracking software? Are you tracking everything you can in Google Analytics, or can you get more out of it?

There are a lot of resources (even some free ones like Google Experiments) available that can help you plan and gather usable data from your tests.

Know your timeline. Testing for too short a period can give you unreliable results, so it's important to know how long your test should last. There are some pretty great tools out there, including one from Visual Website Optimizer, that will help you decide how long your test should run.
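
To give a rough sense of the arithmetic behind a duration estimate, here is a minimal Python sketch. It assumes a standard two-sided comparison of two conversion rates using the normal approximation; the function names, the 5% significance level, and the 80% power are illustrative assumptions, not the method of any particular tool.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, min_relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed in EACH variant to detect the given lift."""
    if min_relative_lift == 0:
        raise ValueError("min_relative_lift must be non-zero")
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_relative_lift)
    # Critical values for the chosen significance level and power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


def days_needed(n_per_variant, daily_visitors, variants=2):
    """Days required if all daily visitors are split across the variants."""
    return math.ceil(n_per_variant * variants / daily_visitors)


# Hypothetical inputs: 5% baseline conversion, hoping to detect a 20% relative lift.
n = sample_size_per_variant(0.05, 0.20)
print(n, "visitors per variant,", days_needed(n, daily_visitors=1000), "days")
```

With these made-up inputs the estimate comes out to roughly 8,000 visitors per variant, or a bit over two weeks at 1,000 visitors a day. Smaller expected lifts push the required sample size up quickly, which is why short tests tend to be unreliable.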

Keep it simple. If you're new to testing, make sure that you stick to only testing two options at a time - it's much easier to interpret your results that way.

We've done both A/B and multivariate (multiple options at once) testing, and we can confidently say that it is much easier to attribute success to a specific change if you only test two options at a time.

Test at the same time. This might sound like a no-brainer, but it is super important to make sure that you are testing your two options at the same time. This way you avoid skewed results due to timing.

If you start testing one variation on a Sunday and the other on a Monday, you may get different results for reasons that have nothing to do with your change: some event on that Sunday could affect that day's numbers without ever touching the other variation.
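
One common way to keep both variations running over the same period is to split incoming visitors between them as they arrive. Here is a minimal Python sketch, assuming each visitor has a stable ID (a cookie or account ID, for example); `assign_variant` and the experiment name are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, variants=("A", "B")) -> str:
    """Deterministically bucket a visitor into one variant.

    Hashing the experiment name together with the visitor ID means the same
    visitor always sees the same variation, while different visitors are
    spread roughly evenly across the variants.
    """
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


# Hypothetical usage: both variations receive traffic on the same days.
for visitor in ("user-1", "user-2", "user-3"):
    print(visitor, assign_variant(visitor, "signup-form"))
```

Because the split happens per visitor rather than per day, a busy Sunday or a slow Monday affects both variations alike.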

Know when you have enough data. This is probably the scariest part of A/B testing. How much data do you need in order to confidently make your decision? Making a decision based on too small a data set can harm you in the long run.

Thankfully, for those of us who are not statistical geniuses, there are online tools like Split Tester and Split Test Analyzer that help you figure out whether the difference between your variations is large enough to warrant a permanent change.
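
If you are curious what a check like this can look like under the hood, here is a minimal Python sketch of a two-proportion z-test. It is one standard approach, not necessarily what the tools above use, and the function name and sample numbers are made up for illustration.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the assumption that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in the data (all or no visitors converted)")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: 200 of 4,000 visitors converted on A, 260 of 4,000 on B.
z, p = two_proportion_z_test(200, 4000, 260, 4000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A small p-value (below 0.05 is a common convention) suggests the difference is unlikely to be chance alone. With only a handful of conversions per variant, the same difference in rates would not clear that bar.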

Don't take data at face value. If you want a hypothesis to be correct, it can be easy to interpret data in a way that gives you the answer you want.

It's important to look at the results from all angles. If one iteration gets you more clicks, it might look like the winner at first glance, but if the other iteration gets you more conversions from fewer clicks, then it is actually the winner.

Remember, you're not just looking for a higher quantity of response - you're looking for quality of response.
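
The clicks-versus-conversions point is easy to see with numbers. Here is a small Python sketch with made-up figures; the `VariantResult` class and `winner_by` helper are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    name: str
    visitors: int
    clicks: int
    conversions: int

    @property
    def click_rate(self) -> float:
        return self.clicks / self.visitors

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.visitors


def winner_by(results, metric):
    """Name of the variant with the highest value for the given metric."""
    return max(results, key=lambda r: getattr(r, metric)).name


# Made-up numbers for illustration only.
results = [
    VariantResult("A", visitors=10_000, clicks=500, conversions=20),
    VariantResult("B", visitors=10_000, clicks=350, conversions=35),
]
print(winner_by(results, "click_rate"))       # A
print(winner_by(results, "conversion_rate"))  # B
```

Judged on clicks alone, A looks better; judged on the metric that matters to the business, B wins.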

Keep testing! One test is never enough. After testing two ideas, take that winner and test it against a second variation to see if you can improve further.

We did this at Venveo with a set of Twitter ads for a mobile app called Mail Pilot. We started with two different ad images: a woman on a computer and a screenshot of the app.

After the screenshot clearly outperformed the human image, we decided to try a second test of screenshots on an iPhone and an iPad to see if we could improve on our previous results, and we did!

The best part was that Mail Pilot reported they beat their sales projections by 18% due to our changes.

Step away from the computer! Your customers aren't merely numbers - data is a reflection of your human users.

You have a wealth of information in every one of your customers. Invite a couple of users to your office for an informal focus group, or take a customer out for coffee and get to know what is important to them.

Use these personal interactions to inform your hypotheses and then gather further data from A/B testing.

If you have a question or would like to share your own A/B testing experiences, please leave a comment below. We'd love to hear from you!